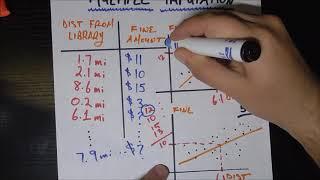

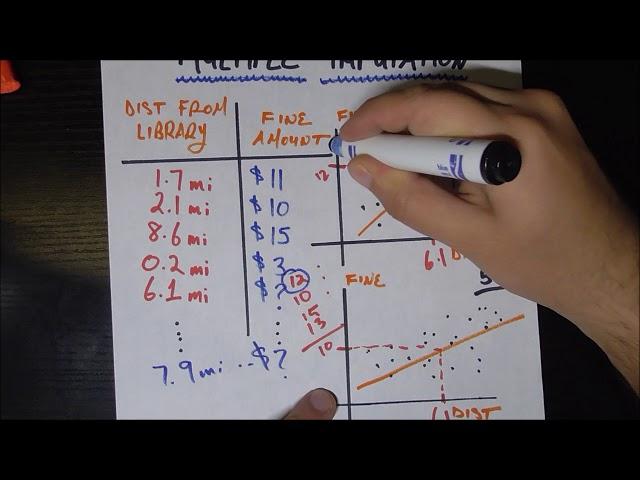

Dealing With Missing Data - Multiple Imputation

Comments:

oh my gosh thank you so much!


Great explanation ! Thanks a lot


Thank you very much :)


Thanks very clear and useful!


Thank you for producing this high-quality video.


This is so clearly explained. Thank you very much for this concise and informative video! I have a question. I believe the purpose of step 2 - calculating the standard deviation - is to confirm that the mean is a reliable one. What if the standard deviation is too large? Does it imply that the imputation method is not a reliable one and should not be adopted? Thank you!


Very informative! Thank you, good sir :)


How does PMM identify nearby candidates when there is a mixture of numeric and categorical variables? Thanks :)


VERY clear explanation. Thank you!


This is an amazing video. Thank you so much. Do we have to check the assumptions for linear regression for each model for each imputed variable?


This is an outstanding explanation. Thank you so much for making this.


It wasn't apparent to me why this estimator would be less biased than a single imputation. You mentioned that doing multiple regressions and then aggregating "washes away the noise", but each of your individual regressions would also be noisier than a single regression that uses the whole dataset. So how do I know that in the aggregate they are less noisy than a single regression?


Thanks for the practical example. It's not clear to me which value we should ultimately use to fill in the missing value with the multiple imputation method. Could you please clarify?


clearly explained! thanks a lot!


Very clear and easy to follow, thanks. But wouldn't we get just as good a result by taking one regression sample of 250 data items, as opposed to five sets of fifty and then taking the mean of the means?


Thanks for the great video! Question: suppose I have 5 different random samples with which I can get 5 regressions, and then \mu_1, ..., \mu_5, to find an aggregate mean \mu_A. Why not just pool those 5 data sets into one large data set and compute the grand mean \mu_B that way? Wouldn't my answer \mu_B be more precise (less variable) than just taking the average of the 5 means to get \mu_A?
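
A quick numerical check of the question above may help. This is only a sketch on synthetic data (the sample size of 50, the normal distribution, and its parameters are all assumptions, not values from the video). When the five samples are the same size, the average of the five means and the grand mean of the pooled data are the same number, so neither is more precise than the other.

```python
import numpy as np

rng = np.random.default_rng(0)

# Five equal-sized synthetic samples (hypothetical data, 50 points each).
samples = [rng.normal(loc=100.0, scale=15.0, size=50) for _ in range(5)]

# mu_A: average of the five per-sample means.
mean_of_means = float(np.mean([s.mean() for s in samples]))

# mu_B: grand mean of all 250 points pooled together.
grand_mean = float(np.concatenate(samples).mean())

print(mean_of_means, grand_mean)
```

With unequal sample sizes the two would differ unless the per-sample means were weighted by sample size. Note that this covers only plain means; the point of repeating the imputation several times is to reflect the uncertainty about the missing values, not to gain precision for a mean.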


Wouldn't this be problematic if your objective with the dataset is precisely to demonstrate whether there is any relationship (like a linear relationship) between those 2 variables? Filling in a missing value through a method which assumes the very same linear relationship you are trying to demonstrate would actually be begging the question, wouldn't it?


Thank you very much.


Thanks for the video! If the subsets are random, all the estimators are unbiased right? The aggregated estimator would just have lower variability.


The data for 1.7 & 2.1 mi is not, prima facie, true.


Sir,

You said we need from 5 to 10 models. How do we calculate the exact number needed?

thank you

Very clear!


Very helpful, thank you!


I was struggling with the concept, but your video made it crystal clear to me, thanks


This explanation is awesome! Congratulations!


A thousand thanks, your explanation is very easy to understand, it's really helpful.


Amazing sir. It's really helpful.


Isn't it actually even more complicated than that? Isn't it that for each regression, instead of imputing the missing fine value with the value predicted by the regression we actually randomly sample from the distribution of fine values around that predicted value (the distribution of fine conditional on distance)? This adds even more of the uncertainty involved in the guess we are making to our imputation process.
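
The idea in the comment above can be sketched as follows. This is a minimal illustration, not the video's method: the data is synthetic, the variable names `distance` and `fine` simply follow the comment, the helper `impute_once` is hypothetical, and the noise is assumed to be normal with the residual standard deviation of the fitted line. Each missing fine is filled with the regression prediction plus a random draw, so the five completed datasets differ from one another.

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic data (hypothetical): fine depends linearly on distance plus noise.
distance = rng.uniform(0.5, 5.0, size=200)
fine = 20.0 + 30.0 * distance + rng.normal(0.0, 10.0, size=200)

# Knock out roughly 20% of the fines at random to simulate missing data.
missing = rng.random(200) < 0.2
fine_obs = np.where(missing, np.nan, fine)


def impute_once(x, y, rng):
    """Fill NaNs in y with the regression prediction plus normal residual noise."""
    obs = ~np.isnan(y)
    # np.polyfit returns coefficients from highest degree down: slope, intercept.
    slope, intercept = np.polyfit(x[obs], y[obs], deg=1)
    resid = y[obs] - (intercept + slope * x[obs])
    sigma = resid.std(ddof=2)  # residual SD; two parameters were estimated
    y_imp = y.copy()
    n_mis = int((~obs).sum())
    y_imp[~obs] = intercept + slope * x[~obs] + rng.normal(0.0, sigma, size=n_mis)
    return y_imp


# Five completed datasets, each with different random draws for the gaps.
imputed_sets = [impute_once(distance, fine_obs, rng) for _ in range(5)]
```

This sketch draws only the residual noise. Fuller multiple imputation procedures typically also draw the regression parameters themselves for each imputation, which adds the uncertainty of the fitted line on top of the scatter around it.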


This was so clear and easy to understand! Thank you!


Can you actually compute the standard deviation? Won't that just reduce the SD for each regression by adding a bunch of points that perfectly fit the regression?


Great job!


Great explanation, thanks.

I have done many retrospective clinical research projects and I have never dealt with missing data. I always left these blank knowing that they will automatically be excluded from analysis.

I believe leaving these missing data unfilled is better to avoid any chance of bias influenced by data of other patients in the study cohort.

What do you think?

Now looking at your clear video I am thinking about this approach as well for future projects.

I am not a statistician and I've done all these while in training.

thank you so much


What do we do with the 5 imputations that have been calculated? Which of them can be considered the final imputed value if we just want to show this as a graph?


Can you do regression imputation next? I really loved this vid


I just wish that you were neater instead of writing everything on that one paper. You keep moving it, and it isn't clear what you are referring to when you point your finger at the paper, as you've written everything in every nook and corner of it.


Great explanation, and excellent in describing how multiple imputation works! But I have a question: how should I choose the final value for the imputation if there are 5 values? Should I average the 5 values, or is there a better approach? Thank you
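
On the question above: in the standard treatment of multiple imputation you usually do not pick one final imputed value. Instead you run the analysis on each completed dataset and pool the results with Rubin's rules. The sketch below assumes five hypothetical estimates and their squared standard errors (the numbers are invented for illustration, and `pool_rubin` is a made-up helper name); it may not match exactly what the video shows.

```python
import numpy as np


def pool_rubin(estimates, variances):
    """Pool per-imputation estimates and their variances with Rubin's rules."""
    q = np.asarray(estimates, dtype=float)
    u = np.asarray(variances, dtype=float)
    m = q.size
    q_bar = q.mean()            # pooled point estimate
    within = u.mean()           # average within-imputation variance
    between = q.var(ddof=1)     # variance of the estimates across imputations
    total = within + (1.0 + 1.0 / m) * between
    return q_bar, total


# Hypothetical results from analysing 5 completed datasets.
est = [10.0, 12.0, 11.0, 9.0, 13.0]
var = [4.0, 4.0, 4.0, 4.0, 4.0]

q_bar, total_var = pool_rubin(est, var)
print(q_bar, total_var)  # 11.0 7.0
```

The between-imputation term is what a single imputation cannot provide: it is the extra uncertainty that comes from not knowing the missing values.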


Great explanation! But one that also seems at odds with what I'm reading from other sources, which make it sound like parameters in the model estimating the outcome are what get randomly selected for each iteration, not the observations used to make the prediction.

Is what I'm describing an alternative approach to the same thing, or am I misunderstanding the approach?

This was my aaahaaa moment. Thank you!


TTTTHhHHHHhhaaaannnnnkkkkk yooooooooooooooooooooooooou very very very much


Thanks for the clear explanation. One thing I'm struggling to understand is when you are running multiple iterations, say 5, how are the different sets of data points generated? In your example, you fit lines among 50 data points. Do you randomly select 50 data points among those that have non-missing value in the raw dataset?


Thank you!


Awesome!


Thank you very much! Love your videos, they are always clearly explained.


Very very clear. Very helpful. Thank you!


I found it confusing!! Especially when you move the paper up and down while you talk!


It would be great if you could share links to some of the papers or books that you refer to here.


thank you


Thanks! That was a really nice explanation!!


Thank you very much. Quick question: which imputed values do you end up leaving in the dataset for further analysis? Say I now want to impute values to be used later for a variety of machine learning applications. Surely I can't use multiple imputation every time I want to implement a new machine learning model and measure a metric?
