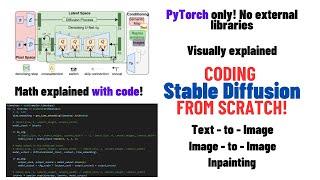

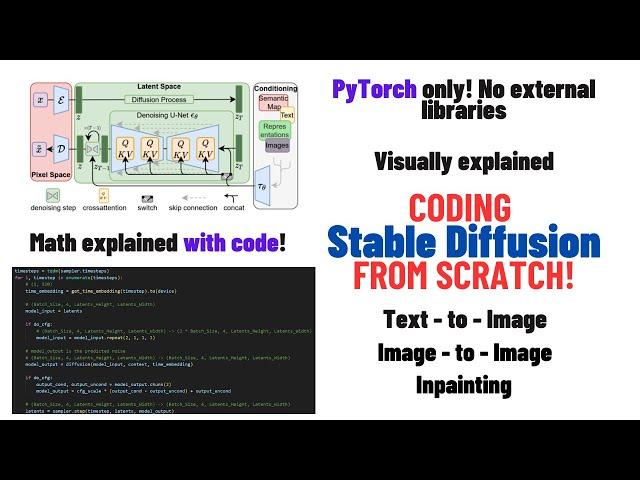

Coding Stable Diffusion from scratch in PyTorch

Comments:

As I understand it, the loss function of an autoencoder is the KL divergence loss. I don't know if I missed it in the video, but I can't figure out where we added the loss function.

At first, going by the accent, I thought he was Indian.

What nobody seems to explain is why the CLIP model is chosen to produce the embeddings. Everyone mentions how it was used to match images to text, but how is this relevant at all if we are using our VAE encoder, which has nothing to do with the image encoder used in CLIP?

Really, really good video. Can you make a video about building a tokenizer from scratch? Many thanks!

Thank you so much! The best Stable Diffusion video I've found!!!

The most powerful deep learning videos in the world are on this channel.

This is extremely helpful. Can you please also make content on score-based diffusion models and more complex scheduling algorithms like Runge-Kutta scheduling? Thanks a lot for your efforts.

Could you please make a video on how to train a Stable Diffusion model? For example, how many images do we need to train it, and what types of images should we collect?

Thank you, I finally understand the relationship between the sampler and the UNet.

Thanks!

Really great video for understanding Stable Diffusion in detail. Thanks a lot for your contribution.

Amazing job, my friend! I just got a job in Shenzhen, China by learning it! Thank you so much, mate. I hope you and your family are living a great life in China :)

The pre-trained weights are not working with the code you have provided.

I wish the code were shown at a larger size to make it easier to read.

In the original Stable Diffusion process, are the encoder and decoder trained independently of the noise-prediction U-Net and then used as pre-trained models, so that the architecture is pre-trained encoder + noise-prediction U-Net + pre-trained decoder (note that here the noise-prediction U-Net is unrelated to the pre-trained encoder/decoder before the combined Stable Diffusion model is trained)? Or are the encoder, noise predictor, and decoder trained together as a unified system, collectively learning patterns from the training images?

I just discovered a great, wonderful, amazing, fantastic, gem channel 🎉🎉🎉

It's the best explanation ever!!!! Thank you!

ModuleNotFoundError: No module named 'pytorch_lightning'

Awesome, this is the best explanation!!!

Thank you!

Jesus, I only have basic knowledge of AI and statistics, but you made me understand quite a lot of things thanks to your video.

This is an amazing video!! Great job!!!

Very well explained! ❤

National Day holiday, back in the hometown 😂 Chinese? Very good video, keep going. Thank you!

So you didn't train the UNet?

Does this cover LoRA? Can you make a video if not?

Thanks, dear, for helping us. Your videos are very helpful.

Is this code compatible with the diffusers library? I fine-tuned the Stable Diffusion model on my dataset, but I need a torch model for further changes, and I couldn't capture the full dependencies from the diffusers code.

Great work! Could you make a tutorial on ControlNet?

Almost Karpathy-level explanations, thank you!

excellent video, full of information

Is it possible to create a Stable Diffusion alternative using Brian2 instead of PyTorch?

Thanks again for the video. This is my second time watching it. I can't help but notice that in the original latent diffusion paper, they used VQGAN to compress images into latents. Is the choice of a VAE just for convenience?

An extremely detailed video about diffusion. I have learned a lot. Thank you ❤❤❤

What about training? I could not find a training file in your GitHub either.

Really appreciated, very informative.

By far the best explanation ❤

Your code is so detailed, and it runs in my environment just fine. Great job!!!👏

Thank you so much for this amazing work!

Great work. I love this so much. Which autocompletion tool are you using in VS Code, by the way?

Why does the VAE encoder not use an activation between the two convolution layers? Don't we need a nonlinearity?

Subscribed ❤❤

Thanks for this informative explanation. I was wondering: in the demo file you used a pretrained model, but you had already built the model on your own. Why don't you use that one, and if I want to use it, how do I do that? Also, could you tell me how it would work for image conditioning without using the CLIP text prompt? Thank you.

Fabulous! Thank you very much!

This is the best explanation of latent diffusion models I've seen

Sir, if we want to pretrain a distilled version of Stable Diffusion, how do we do that?

Great video! Really well made and informative.

On a side note:

Is anybody familiar with the correct VAE loss function? As I understand it, it consists of a reconstruction loss and a KL term. How should the KL term be summed over channels and batches? Also, is there normalization over batches and the training set?

Thanks for your video. I learned a lot. May I ask how I can add LoRA to it? Thanks.
