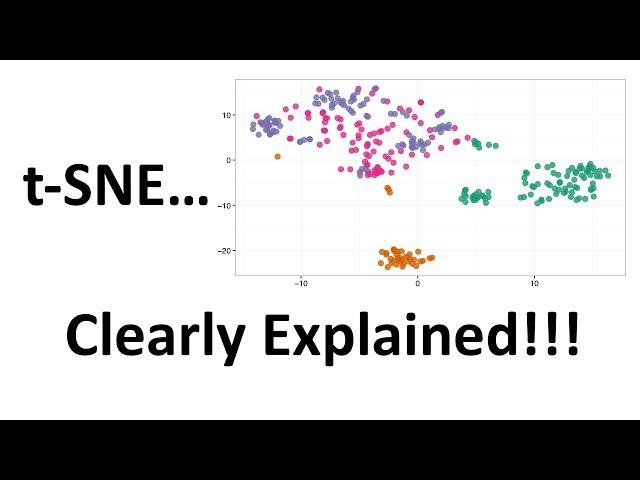

StatQuest: t-SNE, Clearly Explained

StatQuest with Josh Starmer
